The Ordering Service

This document is adapted from The Ordering Service, with some simplifications.

What is ordering?

Many distributed blockchains, such as Ethereum and Bitcoin, are not permissioned, which means that any node can participate in the consensus process, wherein transactions are ordered and bundled into blocks. Because of this fact, these systems rely on probabilistic consensus algorithms which eventually guarantee ledger consistency to a high degree of probability, but which are still vulnerable to divergent ledgers (also known as a ledger “fork”), where different participants in the network have a different view of the accepted order of transactions.

Hyperledger Fabric works differently. It features a node called an orderer (it’s also known as an “ordering node”) that does this transaction ordering, which along with other orderer nodes forms an ordering service. Because Fabric’s design relies on deterministic consensus algorithms, any block validated by the peer is guaranteed to be final and correct. Ledgers cannot fork the way they do in many other distributed and permissionless blockchain networks.

In addition to promoting finality, separating the endorsement of chaincode execution (which happens at the peers) from ordering gives Fabric advantages in performance and scalability, eliminating bottlenecks which can occur when execution and ordering are performed by the same nodes.

Orderer nodes and channel configuration

In addition to their ordering role, orderers also maintain the list of organizations that are allowed to create channels. This list of organizations is known as the “consortium”, and the list itself is kept in the configuration of the “orderer system channel” (also known as the “ordering system channel”). By default, this list, and the channel it lives on, can only be edited by the orderer admin. Note that it is possible for an ordering service to hold several of these lists, which makes the consortium a vehicle for Fabric multi-tenancy.

Orderers also enforce basic access control for channels, restricting who can read and write data to them, and who can configure them. Remember that who is authorized to modify a configuration element in a channel is subject to the policies that the relevant administrators set when they created the consortium or the channel. Configuration transactions are processed by the orderer, as it needs to know the current set of policies to execute its basic form of access control. In this case, the orderer processes the configuration update to make sure that the requestor has the proper administrative rights. If so, the orderer validates the update request against the existing configuration, generates a new configuration transaction, and packages it into a block that is relayed to all peers on the channel. The peers then process the configuration transactions in order to verify that the modifications approved by the orderer do indeed satisfy the policies defined in the channel.
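
The order of the orderer's checks can be shown with a minimal Python sketch. It assumes a heavily simplified model: a channel's policies reduce to a set of admin identities, and its configuration is a versioned dictionary. `ChannelConfig`, `ConfigUpdate` and `process_config_update` are hypothetical names, not Fabric APIs. Real Fabric configuration updates are signed messages evaluated against channel policies.

```python
from dataclasses import dataclass, field


@dataclass
class ChannelConfig:
    """Toy channel configuration (hypothetical): a version number, the
    identities allowed to modify it, and arbitrary key/value settings."""
    version: int
    admins: frozenset
    values: dict = field(default_factory=dict)


@dataclass
class ConfigUpdate:
    requester: str      # identity submitting the update
    base_version: int   # config version the update was computed against
    changes: dict       # settings to add or overwrite


def process_config_update(current, update):
    """Orderer-side steps from the text: check administrative rights,
    validate the update against the existing configuration, and produce
    the new configuration that would be packaged into a block."""
    if update.requester not in current.admins:
        raise PermissionError(f"{update.requester} lacks admin rights")
    if update.base_version != current.version:
        raise ValueError("update is based on a stale configuration")
    merged = {**current.values, **update.changes}
    return ChannelConfig(current.version + 1, current.admins, merged)
```

A peer that receives the resulting block would run its own policy check on the same update before accepting it. That is the second verification the paragraph describes.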

Orderer nodes and identity

Everything that interacts with a blockchain network, including peers, applications, admins, and orderers, acquires their organizational identity from their digital certificate and their Membership Service Provider (MSP) definition.

For more information about identities and MSPs, check out our documentation on Identity and Membership.

Just like peers, ordering nodes belong to an organization. And similar to peers, a separate Certificate Authority (CA) should be used for each organization. Whether this CA will function as the root CA, or whether you choose to deploy a root CA and then intermediate CAs associated with that root CA, is up to you.

Orderers and the transaction flow

Phase one: Proposal

We’ve seen from our topic on Peers that they form the basis for a blockchain network, hosting ledgers, which can be queried and updated by applications through smart contracts.

Specifically, applications that want to update the ledger are involved in a process with three phases that ensures all of the peers in a blockchain network keep their ledgers consistent with each other.

In the first phase, a client application sends a transaction proposal to a subset of peers that will invoke a smart contract to produce a proposed ledger update and then endorse the results. The endorsing peers do not apply the proposed update to their copy of the ledger at this time. Instead, the endorsing peers return a proposal response to the client application. The endorsed transaction proposals will ultimately be ordered into blocks in phase two, and then distributed to all peers for final validation and commit in phase three.
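
Here is a minimal sketch of the endorsement step. It assumes a key/value world state in which every key carries a version number. The endorsing peer runs the contract against its state and records what was read (with versions) and what would be written, but it applies nothing. `endorse` and the `transfer` contract are hypothetical. Fabric's real chaincode API and read/write-set format differ.

```python
def endorse(state, versions, contract, *args):
    """Run `contract` against the peer's current state and return the
    proposed (reads, writes). The peer's state is NOT modified."""
    reads, writes = {}, {}

    def get_state(key):
        reads.setdefault(key, versions.get(key, 0))  # remember version read
        return state.get(key)

    def put_state(key, value):
        writes[key] = value  # buffered in the proposal, not applied

    contract(get_state, put_state, *args)
    return reads, writes


def transfer(get_state, put_state, src, dst, amount):
    # Hypothetical smart contract: move `amount` between two balances.
    put_state(src, get_state(src) - amount)
    put_state(dst, get_state(dst) + amount)
```

For example, `endorse({"alice": 10, "bob": 0}, {"alice": 3, "bob": 1}, transfer, "alice", "bob", 4)` proposes new balances of 6 and 4. The state dictionary passed in still holds 10 and 0 afterwards.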

For an in-depth look at the first phase, refer back to the Peers topic.

Phase two: Ordering and packaging transactions into blocks

After the completion of the first phase of a transaction, a client application has received an endorsed transaction proposal response from a set of peers. It’s now time for the second phase of a transaction.

In this phase, application clients submit transactions containing endorsed transaction proposal responses to an ordering service node. The ordering service creates blocks of transactions which will ultimately be distributed to all peers on the channel for final validation and commit in phase three.

Ordering service nodes receive transactions from many different application clients concurrently. These ordering service nodes work together to collectively form the ordering service. Its job is to arrange batches of submitted transactions into a well-defined sequence and package them into blocks. These blocks will become the blocks of the blockchain!

The number of transactions in a block depends on channel configuration parameters related to the desired size and maximum elapsed duration for a block (BatchSize and BatchTimeout parameters, to be exact). The blocks are then saved to the orderer’s ledger and distributed to all peers that have joined the channel. If a peer happens to be down at this time, or joins the channel later, it will receive the blocks after reconnecting to an ordering service node, or by gossiping with another peer. We’ll see how this block is processed by peers in the third phase.
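
The interplay of the two parameters can be sketched in Python under some simplifying assumptions. BatchSize is reduced to a maximum transaction count (Fabric's BatchSize also includes byte limits). Time is simulated with explicit arrival timestamps rather than a real timer. `cut_blocks` is a hypothetical helper, not Fabric code.

```python
def cut_blocks(arrivals, max_count, batch_timeout):
    """arrivals: (timestamp_seconds, tx_id) pairs in the order the orderer
    sequenced them. A block is cut when it holds max_count transactions, or
    when batch_timeout seconds have elapsed since its first transaction
    arrived. The timeout is checked lazily: if it expired before the next
    arrival, the timer would already have fired, so the block is cut first."""
    blocks, pending, first_ts = [], [], None
    for ts, tx in arrivals:
        if pending and ts - first_ts >= batch_timeout:
            blocks.append(pending)          # timer fired before this arrival
            pending, first_ts = [], None
        if not pending:
            first_ts = ts                   # start the batch timer
        pending.append(tx)
        if len(pending) == max_count:
            blocks.append(pending)          # block is full
            pending, first_ts = [], None
    if pending:
        blocks.append(pending)              # the timer would eventually fire
    return blocks
```

With `[(0.0, "T1"), (0.1, "T2"), (0.2, "T3"), (3.0, "T4")]`, `max_count=2` and `batch_timeout=2.0`, T1 and T2 fill a block. T3 is cut alone because its timeout expires before T4 arrives. T4 is flushed when its own timer would fire.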

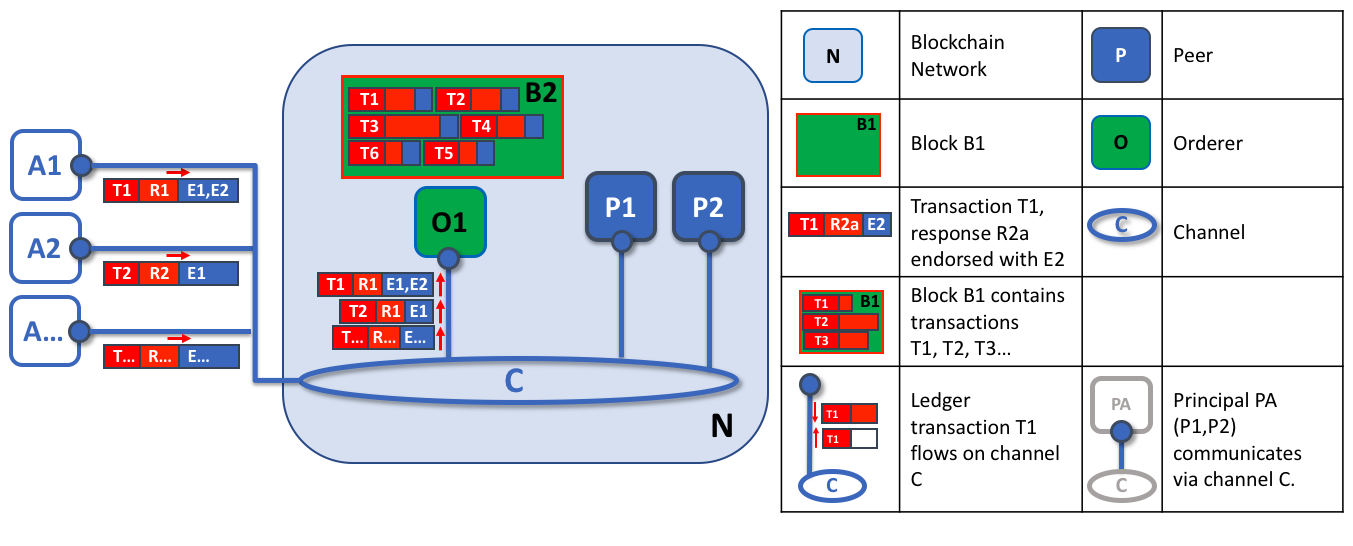

The first role of an ordering node is to package proposed ledger updates. In this example, application A1 sends a transaction T1 endorsed by E1 and E2 to the orderer O1. In parallel, application A2 sends transaction T2 endorsed by E1 to the orderer O1. O1 packages transaction T1 from application A1 and transaction T2 from application A2 together with other transactions from other applications in the network into block B2. We can see that in B2, the transaction order is T1, T2, T3, T4, T6, T5, which may not be the order in which these transactions arrived at the orderer! (This example shows a very simplified ordering service configuration with only one ordering node.)

It’s worth noting that the sequencing of transactions in a block is not necessarily the same as the order received by the ordering service, since there can be multiple ordering service nodes that receive transactions at approximately the same time. What’s important is that the ordering service puts the transactions into a strict order, and peers will use this order when validating and committing transactions.

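Personal note: On first reading, I wondered how multiple ordering service nodes, each receiving different transactions, could keep the transaction order consistent. The question goes away if you treat the ordering service as a single whole. After the individual ordering service nodes receive transactions, all of the received transactions are brought together. Each ordering service node does not order transactions on its own. Moreover, each ordering service node only receives transactions from the applications connected to it, so what it sees is not the full set of transactions on the channel. That is why no single ordering service node can order them independently.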

This strict ordering of transactions within blocks makes Hyperledger Fabric a little different from other blockchains where the same transaction can be packaged into multiple different blocks that compete to form a chain. In Hyperledger Fabric, the blocks generated by the ordering service are final. Once a transaction has been written to a block, its position in the ledger is immutably assured. As we said earlier, Hyperledger Fabric’s finality means that there are no ledger forks — validated transactions will never be reverted or dropped.
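
Finality in Fabric comes from the deterministic ordering described above. Hash-linking blocks is what makes any later tampering with a committed transaction's position detectable. Below is a minimal Python sketch of that linkage. It assumes a simplified header made of a block number, the previous block's hash and a hash of the block's data. JSON plus SHA-256 is used only for illustration and is not Fabric's real block encoding. The helper names are hypothetical.

```python
import hashlib
import json


def block_hash(number, prev_hash, txs):
    """Hash a simplified header: (number, previous hash, data hash)."""
    data_hash = hashlib.sha256(json.dumps(txs).encode()).hexdigest()
    header = json.dumps([number, prev_hash, data_hash]).encode()
    return hashlib.sha256(header).hexdigest()


def build_chain(batches):
    """Turn ordered batches of transaction IDs into hash-linked blocks."""
    chain, prev = [], ""
    for number, txs in enumerate(batches):
        h = block_hash(number, prev, txs)
        chain.append({"number": number, "prev_hash": prev, "txs": txs, "hash": h})
        prev = h
    return chain


def verify_chain(chain):
    """Recompute every hash and link; any reordering inside a block breaks it."""
    prev = ""
    for block in chain:
        if block["prev_hash"] != prev:
            return False
        if block_hash(block["number"], prev, block["txs"]) != block["hash"]:
            return False
        prev = block["hash"]
    return True
```

Swapping two transactions inside an already-built block makes `verify_chain` return `False`, because that block's recomputed hash no longer matches the one recorded in it and referenced by its successor.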

We can also see that, whereas peers execute smart contracts and process transactions, orderers most definitely do not. Every authorized transaction that arrives at an orderer is mechanically packaged in a block — the orderer makes no judgement as to the content of a transaction (except for channel configuration transactions, as mentioned earlier).

At the end of phase two, we see that orderers have been responsible for the simple but vital processes of collecting proposed transaction updates, ordering them, and packaging them into blocks, ready for distribution.

Phase three: Validation and commit

The third phase of the transaction workflow involves the distribution and subsequent validation of blocks from the orderer to the peers, where they can be committed to the ledger.

Phase 3 begins with the orderer distributing blocks to all peers connected to it. It’s also worth noting that not every peer needs to be connected to an orderer — peers can cascade blocks to other peers using the gossip protocol.

Each peer will validate distributed blocks independently, but in a deterministic fashion, ensuring that ledgers remain consistent. Specifically, each peer in the channel will validate each transaction in the block to ensure it has been endorsed by the required organization’s peers, that its endorsements match, and that it hasn’t become invalidated by other recently committed transactions which may have been in-flight when the transaction was originally endorsed. Invalidated transactions are still retained in the immutable block created by the orderer, but they are marked as invalid by the peer and do not update the ledger’s state.
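
The three checks can be sketched in Python using a toy transaction format. Each endorsement is represented by the digest of the read/write set that the organization's peer endorsed. The endorsement policy is simply "every org in `required_orgs` endorsed". Each key carries a version number that increments on every committed write. Real Fabric policies, signatures and versioning are richer. `Tx` and `validate_block` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Tx:
    tx_id: str
    endorsements: dict  # org -> digest of the read/write set that org endorsed
    reads: dict         # key -> version observed during endorsement
    writes: dict        # key -> value to write if the transaction is valid


def validate_block(txs, required_orgs, state, versions):
    """Validate transactions strictly in block order and return one validity
    flag per transaction. Valid transactions update `state` and bump key
    versions; invalid ones stay in the block but change nothing."""
    flags = []
    for tx in txs:
        # 1. Endorsed by every required organization?
        ok = set(required_orgs).issubset(tx.endorsements)
        # 2. Do all endorsements agree on the same result?
        ok = ok and len(set(tx.endorsements.values())) == 1
        # 3. Is every key read still at the version seen at endorsement time?
        ok = ok and all(versions.get(k, 0) == v for k, v in tx.reads.items())
        if ok:
            for key, value in tx.writes.items():
                state[key] = value
                versions[key] = versions.get(key, 0) + 1
        flags.append(ok)
    return flags
```

Every peer runs the same deterministic checks in the same block order, so every peer computes the same flags. For example, a second transaction that read a key the first one just updated is flagged invalid on all peers alike.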

The second role of an ordering node is to distribute blocks to peers. In this example, orderer O1 distributes block B2 to peer P1 and peer P2. Peer P1 processes block B2, resulting in a new block being added to ledger L1 on P1. In parallel, peer P2 processes block B2, resulting in a new block being added to ledger L1 on P2. Once this process is complete, the ledger L1 has been consistently updated on peers P1 and P2, and each may inform connected applications that the transaction has been processed.

In summary, phase three sees the blocks generated by the ordering service applied consistently to the ledger. The strict ordering of transactions into blocks allows each peer to validate that transaction updates are consistently applied across the blockchain network.

For a deeper look at phase 3, refer back to the Peers topic.

Ordering service implementations

While every ordering service currently available handles transactions and configuration updates the same way, there are nevertheless several different implementations for achieving consensus on the strict ordering of transactions between ordering service nodes.

For information about how to stand up an ordering node (regardless of the implementation the node will be used in), check out our documentation on standing up an ordering node.

-

Raft (recommended)

New as of v1.4.1, Raft is a crash fault tolerant (CFT) ordering service based on an implementation of the Raft protocol in etcd. Raft follows a “leader and follower” model, where a leader node is elected (per channel) and its decisions are replicated by the followers. Raft ordering services should be easier to set up and manage than Kafka-based ordering services, and their design allows different organizations to contribute nodes to a distributed ordering service. (Personal note: I take the last sentence to mean that the ordering service can be made up of ordering nodes belonging to different organizations.)

-

Kafka (deprecated in v2.x)

Similar to Raft-based ordering, Apache Kafka is a CFT implementation that uses a “leader and follower” node configuration. Kafka utilizes a ZooKeeper ensemble for management purposes. The Kafka based ordering service has been available since Fabric v1.0, but many users may find the additional administrative overhead of managing a Kafka cluster intimidating or undesirable.

-

Solo (deprecated in v2.x)

The Solo implementation of the ordering service is intended for test only and consists only of a single ordering node. It has been deprecated and may be removed entirely in a future release. Existing users of Solo should move to a single node Raft network for equivalent function.

Raft

For information on how to configure a Raft ordering service, check out our documentation on configuring a Raft ordering service.

Raft is the go-to ordering service choice for production networks. The Fabric implementation of the established Raft protocol uses a “leader and follower” model, in which a leader is dynamically elected among the ordering nodes in a channel (this collection of nodes is known as the “consenter set”), and that leader replicates messages to the follower nodes. Because the system can sustain the loss of nodes, including leader nodes, as long as a majority of ordering nodes (what’s known as a “quorum”) remains, Raft is said to be “crash fault tolerant” (CFT). In other words, if there are three nodes in a channel, it can withstand the loss of one node (leaving two remaining). If you have five nodes in a channel, you can lose two nodes (leaving three remaining nodes). This feature of a Raft ordering service is a factor in establishing a high availability strategy for your ordering service. Additionally, in a production environment, you would want to spread these nodes across data centers and even locations, for example by putting one node in each of three different data centers. That way, if a data center or entire location becomes unavailable, the nodes in the other data centers continue to operate.
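
The arithmetic behind these numbers, along with the advice on spreading nodes across data centers, fits in a few lines of Python. `quorum`, `tolerated_failures` and `survives_site_loss` are illustrative helpers, not part of Fabric. The last one assumes nodes are grouped by site and checks that losing any single site still leaves a quorum.

```python
def quorum(n):
    """Smallest majority of an n-node consenter set."""
    return n // 2 + 1


def tolerated_failures(n):
    """How many nodes can crash while a quorum still remains."""
    return n - quorum(n)


def survives_site_loss(nodes_per_site):
    """True if losing any one site (data center) still leaves a quorum.
    nodes_per_site lists how many consenters run in each site."""
    total = sum(nodes_per_site)
    return all(total - lost >= quorum(total) for lost in nodes_per_site)
```

A fourth node tolerates no more failures than three do (`tolerated_failures(4) == 1`). Consenter sets are therefore often sized with an odd number of nodes. Three nodes split 2 + 1 across two sites fail the site test, while one node in each of three sites passes it.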

From the perspective of the service they provide to a network or a channel, Raft and the existing Kafka-based ordering service (which we’ll talk about later) are similar. They’re both CFT ordering services using the leader and follower design. If you are an application developer, smart contract developer, or peer administrator, you will not notice a functional difference between an ordering service based on Raft versus Kafka. However, there are a few major differences worth considering, especially if you intend to manage an ordering service:

-

Raft is easier to set up. Although Kafka has many admirers, even those admirers will (usually) admit that deploying a Kafka cluster and its ZooKeeper ensemble can be tricky, requiring a high level of expertise in Kafka infrastructure and settings. Additionally, there are many more components to manage with Kafka than with Raft, which means that there are more places where things can go wrong. And Kafka has its own versions, which must be coordinated with your orderers. With Raft, everything is embedded into your ordering node.

-

Kafka and Zookeeper are not designed to be run across large networks. While Kafka is CFT, it should be run in a tight group of hosts. This means that practically speaking you need to have one organization run the Kafka cluster. Given that, having ordering nodes run by different organizations when using Kafka (which Fabric supports) doesn’t give you much in terms of decentralization because the nodes will all go to the same Kafka cluster which is under the control of a single organization. With Raft, each organization can have its own ordering nodes, participating in the ordering service, which leads to a more decentralized system.

-

Raft is supported natively. With Kafka, by contrast, users are required to get the requisite images and learn how to use Kafka and ZooKeeper on their own. Likewise, support for Kafka-related issues is handled through Apache, the open-source developer of Kafka, not Hyperledger Fabric. The Fabric Raft implementation, on the other hand, has been developed and will be supported within the Fabric developer community and its support apparatus.

-

Where Kafka uses a pool of servers (called “Kafka brokers”) and the admin of the orderer organization specifies how many nodes they want to use on a particular channel, Raft allows the users to specify which ordering nodes will be deployed to which channel. In this way, peer organizations can make sure that, if they also own an orderer, this node will be made part of the ordering service of that channel, rather than trusting and depending on a central admin to manage the Kafka nodes.

-

Raft is the first step toward Fabric’s development of a byzantine fault tolerant (BFT) ordering service. As we’ll see, some decisions in the development of Raft were driven by this. If you are interested in BFT, learning how to use Raft should ease the transition.

Note: Similar to Solo and Kafka, a Raft ordering service can lose transactions after acknowledgement of receipt has been sent to a client. For example, if the leader crashes at approximately the same time as a follower provides acknowledgement of receipt. Therefore, application clients should listen on peers for transaction commit events regardless (to check for transaction validity), but extra care should be taken to ensure that the client also gracefully tolerates a timeout in which the transaction does not get committed in a configured timeframe. Depending on the application, it may be desirable to resubmit the transaction or collect a new set of endorsements upon such a timeout.
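
Here is a minimal Python sketch of this client-side pattern. It assumes a hypothetical `submit` callable that sends the transaction to the ordering service, and a `queue.Queue` that a peer event listener fills with `(tx_id, is_valid)` pairs. On timeout it simply resubmits. Depending on the application, collecting a new set of endorsements before retrying may be the better choice, as the note says.

```python
import queue
import time


def submit_and_wait(submit, commit_events, tx_id, timeout_s, max_attempts=3):
    """Submit a transaction and wait for its commit event from a peer.

    submit(tx_id) sends the transaction to the ordering service (hypothetical).
    commit_events is a queue.Queue fed by a peer event listener with
    (tx_id, is_valid) tuples. Returns is_valid, or raises TimeoutError if no
    commit event arrives within timeout_s on any of max_attempts tries."""
    for _ in range(max_attempts):
        submit(tx_id)
        deadline = time.monotonic() + timeout_s
        while (remaining := deadline - time.monotonic()) > 0:
            try:
                event_tx, is_valid = commit_events.get(timeout=remaining)
            except queue.Empty:
                break  # timed out: the transaction may have been lost
            if event_tx == tx_id:
                return is_valid  # committed; the peer reports valid or invalid
            # events for other transactions are ignored
    raise TimeoutError(f"{tx_id} not committed after {max_attempts} attempts")
```

Checking `is_valid` matters even when an event arrives, because a committed transaction can still be marked invalid by the peers.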

Raft concepts

While Raft offers many of the same features as Kafka (albeit in a simpler and easier-to-use package), it functions substantially differently from Kafka under the covers and introduces a number of new concepts, or twists on existing concepts, to Fabric.

Log entry. The primary unit of work in a Raft ordering service is a “log entry”, with the full sequence of such entries known as the “log”. We consider the log consistent if a majority (a quorum, in other words) of members agree on the entries and their order, making the logs on the various orderers replicated.

Consenter set. The ordering nodes actively participating in the consensus mechanism for a given channel and receiving replicated logs for the channel. This can be all of the nodes available (either in a single cluster or in multiple clusters contributing to the system channel), or a subset of those nodes.

Finite-State Machine (FSM). Every ordering node in Raft has an FSM and collectively they’re used to ensure that the sequence of logs in the various ordering nodes is deterministic (written in the same sequence).

Quorum. Describes the minimum number of consenters that need to affirm a proposal so that transactions can be ordered. For every consenter set, this is a majority of nodes. In a cluster with five nodes, three must be available for there to be a quorum. If a quorum of nodes is unavailable for any reason, the ordering service cluster becomes unavailable for both read and write operations on the channel, and no new logs can be committed.

Leader. This is not a new concept (Kafka also uses leaders, as we’ve said), but it’s critical to understand that at any given time, a channel’s consenter set elects a single node to be the leader (we’ll describe how this happens in Raft later). The leader is responsible for ingesting new log entries, replicating them to follower ordering nodes, and managing when an entry is considered committed. This is not a special type of orderer. It is only a role that an orderer may have at certain times, and not at others, as circumstances determine.

Follower. Again, not a new concept, but what’s critical to understand about followers is that the followers receive the logs from the leader and replicate them deterministically, ensuring that logs remain consistent. As we’ll see in our section on leader election, the followers also receive “heartbeat” messages from the leader. In the event that the leader stops sending those messages for a configurable amount of time, the followers will initiate a leader election and one of them will be elected the new leader.
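
The leader's bookkeeping for deciding when an entry is committed can be sketched in Python. Given the highest log index each consenter is known to hold (leader included), the committed index is the highest index stored on a quorum. This is a simplification. Full Raft also requires that the entry belong to the leader's current term before it counts as committed, and the sketch omits that rule. `commit_index` is a hypothetical helper.

```python
def commit_index(match_indexes):
    """match_indexes: highest log index replicated on each consenter,
    leader included. After sorting in descending order, the entry at
    position quorum - 1 (which equals len // 2) is held by at least a
    quorum of consenters, so everything up to it is committed."""
    ranked = sorted(match_indexes, reverse=True)
    return ranked[len(ranked) // 2]
```

With five consenters holding entries up to 10, 9, 9, 7 and 3, the commit index is 9, since three nodes (a quorum) hold entry 9 or later.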

Raft in a transaction flow

Every channel runs on a separate instance of the Raft protocol, which allows each instance to elect a different leader. This configuration also allows further decentralization of the service in use cases where clusters are made up of ordering nodes controlled by different organizations. While all Raft nodes must be part of the system channel, they do not necessarily have to be part of all application channels. Channel creators (and channel admins) have the ability to pick a subset of the available orderers and to add or remove ordering nodes as needed (as long as only a single node is added or removed at a time).
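
Why add or remove only one node at a time? With a single change, every majority of the old consenter set overlaps every majority of the new one. Two disjoint groups can therefore never both believe they hold a quorum. The brute-force Python check below illustrates this. It also applies the rule from the text that consenters must be members of the system channel. All function names are hypothetical, and this is not Fabric's own validation code.

```python
from itertools import combinations


def smallest_majorities(nodes):
    """All minimum-size majorities of a node set. Larger majorities contain
    one of these, so checking these alone is enough for overlap tests."""
    nodes = sorted(nodes)
    return [set(c) for c in combinations(nodes, len(nodes) // 2 + 1)]


def majorities_overlap(old, new):
    """True if every majority of `old` shares a node with every majority of `new`."""
    return all(a & b for a in smallest_majorities(old) for b in smallest_majorities(new))


def valid_consenter_change(current, proposed, system_channel_nodes):
    """Hypothetical rule check: exactly one node added or removed, and every
    proposed consenter is also a member of the system channel."""
    current, proposed = set(current), set(proposed)
    changed = len(proposed - current) + len(current - proposed)
    return changed == 1 and proposed <= set(system_channel_nodes)
```

Growing {O1, O2, O3} straight to five nodes fails both checks. {O1, O2} is a majority of the old set and {O3, O4, O5} a majority of the new one, yet they share no node.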

While this configuration creates more overhead in the form of redundant heartbeat messages and goroutines, it lays necessary groundwork for BFT.

In Raft, transactions (in the form of proposals or configuration updates) are automatically routed by the ordering node that receives the transaction to the current leader of that channel. This means that peers and applications do not need to know who the leader node is at any particular time. Only the ordering nodes need to know.
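
The forwarding behavior can be modeled with a toy Python cluster. A client may hand a transaction to any ordering node, and only the nodes need to know who leads each channel. `OrderingNode` and `Cluster` are hypothetical classes. Replication, leader discovery and network errors are left out.

```python
class OrderingNode:
    """Toy ordering node (hypothetical). Any node accepts a transaction;
    a non-leader forwards it to the current leader of that channel."""

    def __init__(self, name, cluster):
        self.name = name
        self.cluster = cluster
        self.log = []  # entries accepted as leader (all channels, for brevity)

    def submit(self, channel, tx):
        leader = self.cluster.leaders[channel]
        if leader is self:
            self.log.append(tx)  # a real leader would now replicate to followers
            return self.name
        return leader.submit(channel, tx)  # transparent forwarding


class Cluster:
    """Holds the nodes and, per channel, the node currently acting as leader.
    Each channel runs its own Raft instance, so leaders can differ."""

    def __init__(self, names):
        self.nodes = {n: OrderingNode(n, self) for n in names}
        self.leaders = {}
```

If O2 leads `ch1` and O3 leads `ch2`, a client connected only to O1 can still submit to both channels. O1 routes each transaction to the right leader without the client knowing which node that is.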

When the orderer validation checks have been completed, the transactions are ordered, packaged into blocks, consented on, and distributed, as described in phase two of our transaction flow.

Architectural notes

How leader election works in Raft

Although the process of electing a leader happens within the orderer’s internal processes, it’s worth noting how the process works.

Raft nodes are always in one of three states: follower, candidate, or leader. All nodes initially start out as a follower. In this state, they can accept log entries from a leader (if one has been elected), or cast votes for leader. If no log entries or heartbeats are received for a set amount of time (for example, five seconds), nodes self-promote to the candidate state. In the candidate state, nodes request votes from other nodes. If a candidate receives a quorum of votes, then it is promoted to a leader. The leader must accept new log entries and replicate them to the followers.
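
The transitions in this paragraph form a small state machine. Below is a minimal Python sketch that uses simulated clock values and the five-second timeout from the example. It omits Raft terms, randomized timeouts, log up-to-date checks and repeated elections. It follows the standard Raft rule that a candidate votes for itself. `Role` and `RaftNode` are hypothetical names, not the API of etcd or Fabric.

```python
from enum import Enum


class Role(Enum):
    FOLLOWER = "follower"
    CANDIDATE = "candidate"
    LEADER = "leader"


class RaftNode:
    def __init__(self, cluster_size, election_timeout=5.0):
        self.role = Role.FOLLOWER        # every node starts as a follower
        self.cluster_size = cluster_size
        self.election_timeout = election_timeout
        self.last_heard = 0.0            # time of last heartbeat or log entry
        self.votes = 0

    def on_heartbeat(self, now):
        # Terms are omitted, so any heartbeat is treated as coming from a
        # legitimate leader: stay (or become) a follower and reset the timer.
        self.role = Role.FOLLOWER
        self.last_heard = now

    def tick(self, now):
        # Nothing heard for a full election timeout: self-promote to
        # candidate and vote for ourselves.
        if self.role is Role.FOLLOWER and now - self.last_heard >= self.election_timeout:
            self.role = Role.CANDIDATE
            self.votes = 1

    def on_vote_granted(self):
        # A candidate holding a quorum of votes is promoted to leader.
        if self.role is Role.CANDIDATE:
            self.votes += 1
            if self.votes >= self.cluster_size // 2 + 1:
                self.role = Role.LEADER
```

In a three-node cluster, one vote from another node plus the candidate's own vote reaches the quorum of two, so that single grant promotes the candidate to leader.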

For a visual representation of how the leader election process works, check out The Secret Lives of Data.

Snapshots

If an ordering node goes down, how does it get the logs it missed when it is restarted?

While it’s possible to keep all logs indefinitely, Raft saves disk space with a process called “snapshotting”, in which users define how many bytes of data will be kept in the log. This amount of data corresponds to a certain number of blocks, depending on how much data the blocks contain. Note that only full blocks are stored in a snapshot.

For example, let’s say lagging replica R1 was just reconnected to the network. Its latest block is 100. Leader L is at block 196 and is configured to snapshot at an amount of data that, in this case, represents 20 blocks. R1 would therefore receive block 180 (the latest snapshot) from L and then make a Deliver request for blocks 101 to 180. Blocks 181 to 196 would then be replicated to R1 through the normal Raft protocol.
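
The arithmetic of this example can be sketched in Python. The sketch assumes, as the example does, that the snapshot interval corresponds to a fixed number of blocks and that snapshots are taken at multiples of it. In Fabric the interval is configured in bytes, so the real block count varies. `catch_up_plan` is a hypothetical helper. It also assumes the leader's Raft log still holds every entry after its latest snapshot.

```python
def _span(first, last):
    """Inclusive block range, or None if it is empty."""
    return (first, last) if first <= last else None


def catch_up_plan(replica_height, leader_height, blocks_per_snapshot):
    """Return (snapshot_block, deliver_range, raft_range) for a lagging
    replica, assuming the leader snapshots every `blocks_per_snapshot`
    blocks and keeps all Raft log entries after its latest snapshot."""
    last_snapshot = (leader_height // blocks_per_snapshot) * blocks_per_snapshot
    if replica_height >= last_snapshot:
        # Everything missing is still in the Raft log: no snapshot needed.
        return None, None, _span(replica_height + 1, leader_height)
    return (
        last_snapshot,
        (replica_height + 1, last_snapshot),      # fetched with Deliver
        _span(last_snapshot + 1, leader_height),  # normal Raft replication
    )
```

For R1 at block 100 and L at block 196 with a 20-block interval, the plan is a snapshot at block 180, a Deliver request for blocks 101 to 180, and Raft replication of blocks 181 to 196. The sketch treats block 180 as covered by the Deliver request.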

Kafka (deprecated in v2.x)

The other crash fault tolerant ordering service supported by Fabric is an adaptation of a Kafka distributed streaming platform for use as a cluster of ordering nodes. You can read more about Kafka at the Apache Kafka Web site, but at a high level, Kafka uses the same conceptual “leader and follower” configuration used by Raft, in which transactions (which Kafka calls “messages”) are replicated from the leader node to the follower nodes. In the event the leader node goes down, one of the followers becomes the leader and ordering can continue, ensuring fault tolerance, just as with Raft.

The management of the Kafka cluster, including the coordination of tasks, cluster membership, access control, and controller election, among others, is handled by a ZooKeeper ensemble and its related APIs.

Kafka clusters and ZooKeeper ensembles are notoriously tricky to set up, so our documentation assumes a working knowledge of Kafka and ZooKeeper. If you decide to use Kafka without having this expertise, you should complete, at a minimum, the first six steps of the Kafka Quickstart guide before experimenting with the Kafka-based ordering service. You can also consult this sample configuration file for a brief explanation of the sensible defaults for Kafka and ZooKeeper.

To learn how to bring up a Kafka-based ordering service, check out our documentation on Kafka.